
CAREER: System Research to Enable Practical Immersive Streaming: From 360-Degree Towards Volumetric Video Delivery


Abstract

Immersive video technologies allow users to freely explore remote or virtual environments. For example, with 360-degree videos, users can view the scene from any orientation; with volumetric videos, users can control not only the view orientation but also the camera position. Such highly immersive content has applications in entertainment, medicine, education, manufacturing, and e-commerce, among other areas. However, current video streaming infrastructure cannot fully support the high bandwidth, low latency, and large storage requirements of these emerging formats. This project aims to address the challenges of efficiently transmitting and storing immersive video streams by proposing new, efficient representations of this content. These representations can be adapted to users' viewing behaviors and can be generated with the low latency required for real-time streaming applications. If successful, the proposed research will enable higher-quality immersive streaming than is possible with current systems and thereby make immersive streaming experiences more practical and useful.

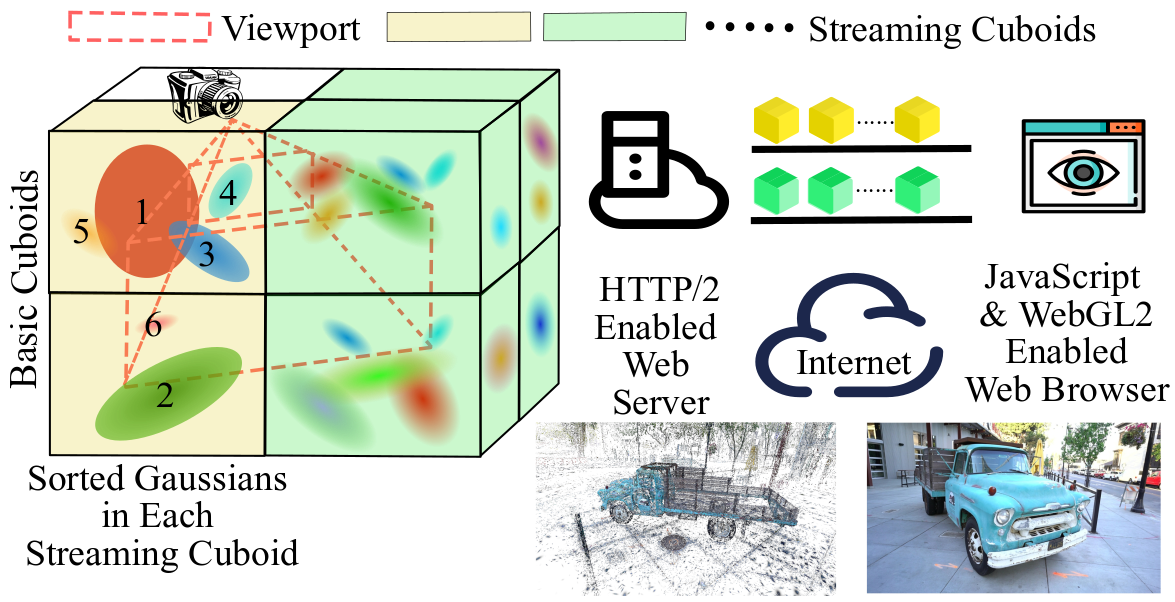

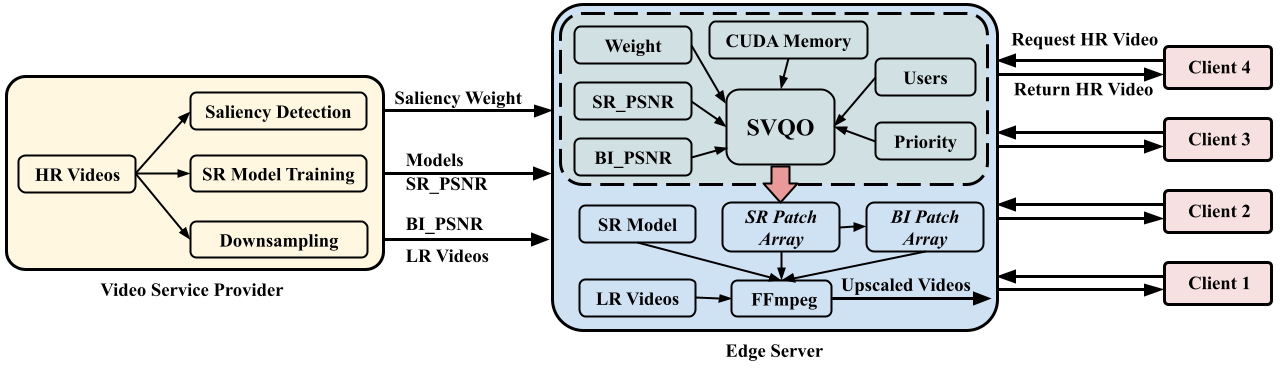

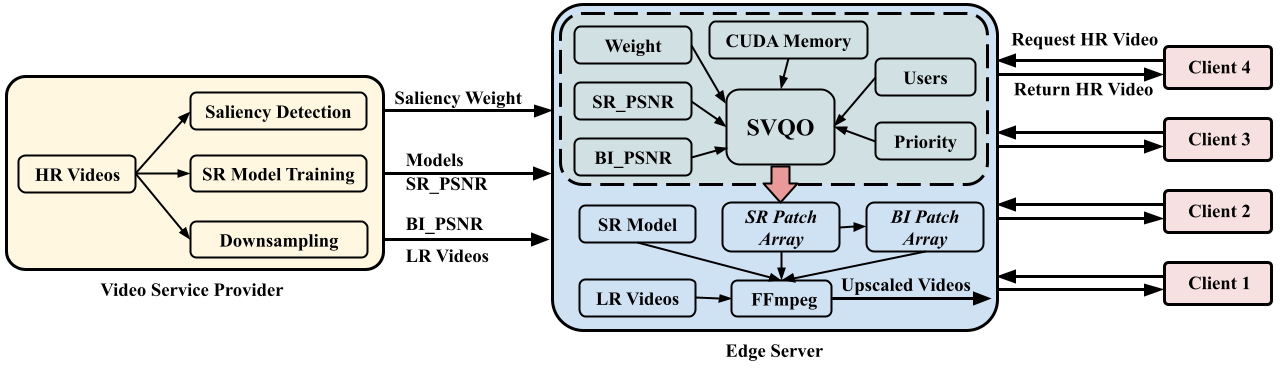

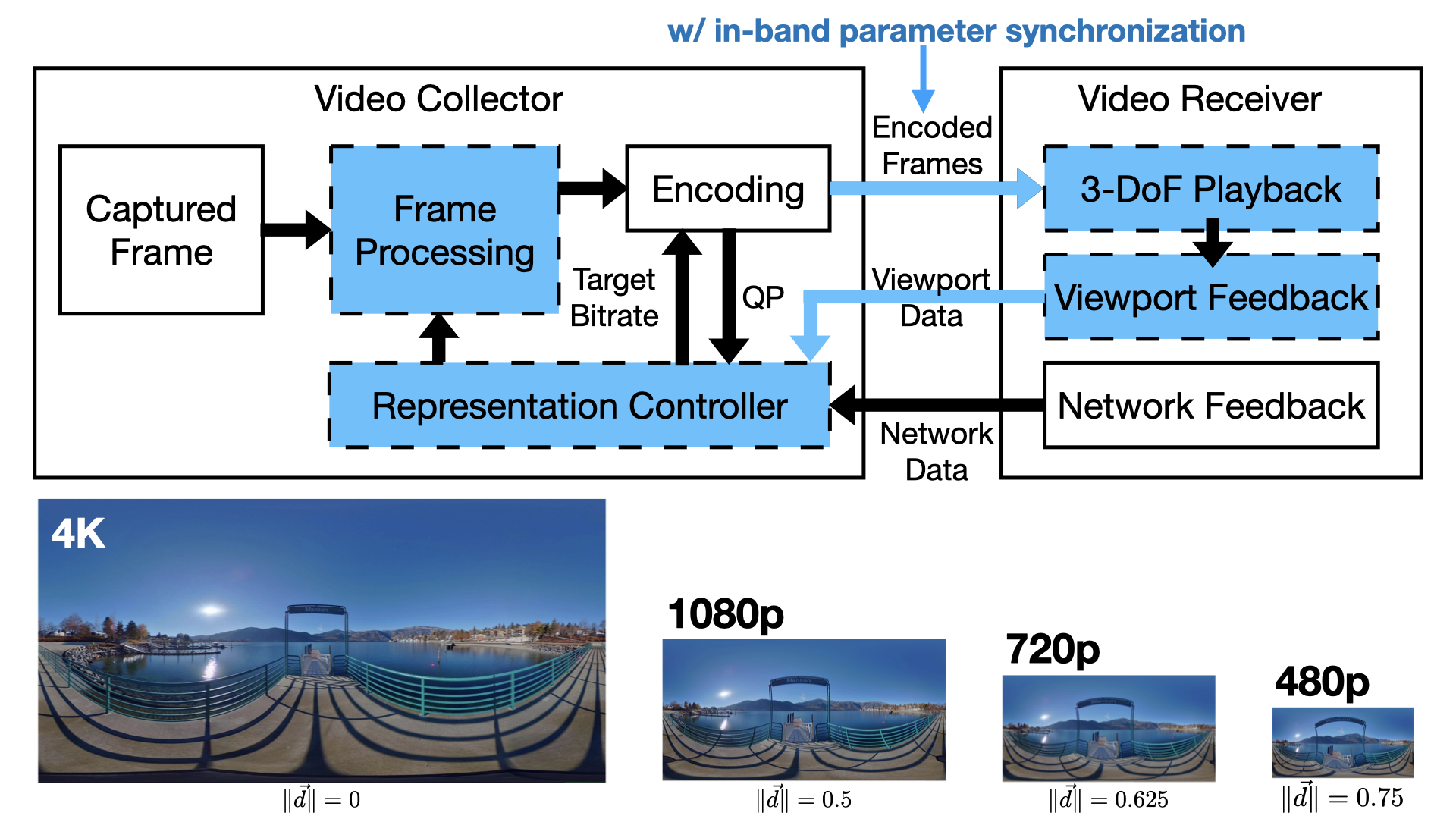

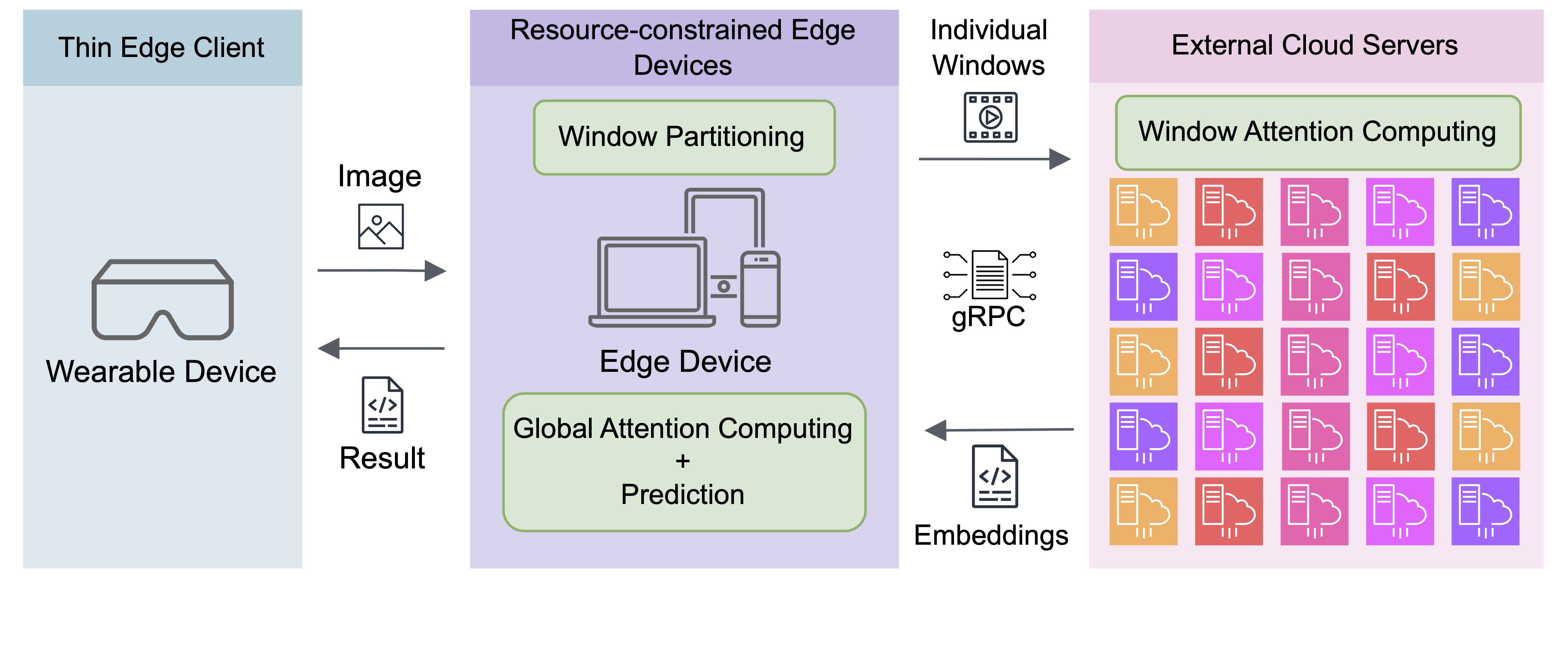

This project investigates techniques for improving the efficiency of two specific immersive streaming applications. For real-time 360-degree video, the project will build a system that generates area-of-focus projections in real time. Each area-of-focus projection is selected so that its high-quality focus area aligns with a predicted user view, and low-latency generation is achieved using graphics processing units (GPUs) on nearby edge or cloud servers. For volumetric video streaming, the project aims to create a representation of the video that is efficient in both storage and bandwidth. The representation consists of area-of-focus versions of the video at a selected set of points within the volume, together with patches that cover disoccluded pixels, allowing high-quality video to be delivered to users positioned anywhere in the scene. The proposed system further uses a Hypertext Transfer Protocol Version 2 (HTTP/2) transmission approach to deliver this representation bandwidth-efficiently. To measure the user's true experience of immersive streams more precisely, the project will also create immersive video streaming datasets and investigate a new quality metric, which will use novel approaches to correlate user-perceived quality with the visual artifacts introduced by encoding and network transmission.
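
To make the selection step more concrete, the following is a minimal Python sketch of how a client or edge server might decide which area-of-focus version to serve. It is not the project's method: the `Pose` type, the linear-extrapolation view predictor, the discrete set of focus centers, and the nearest-point rule for choosing among volumetric anchor points are all hypothetical stand-ins chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Hypothetical head-orientation sample: time (s), yaw and pitch (degrees)."""
    t: float
    yaw: float    # degrees, in [-180, 180)
    pitch: float  # degrees, in [-90, 90]


def wrap_deg(a: float) -> float:
    """Wrap an angle in degrees to the range [-180, 180)."""
    return (a + 180.0) % 360.0 - 180.0


def predict_orientation(prev: Pose, cur: Pose, horizon: float) -> tuple[float, float]:
    """Hypothetical predictor: linearly extrapolate yaw/pitch `horizon` seconds ahead."""
    dt = cur.t - prev.t
    if dt <= 0:
        return cur.yaw, cur.pitch
    # Use the wrapped difference so a turn across +/-180 degrees is handled correctly.
    yaw_rate = wrap_deg(cur.yaw - prev.yaw) / dt
    pitch_rate = (cur.pitch - prev.pitch) / dt
    yaw = wrap_deg(cur.yaw + yaw_rate * horizon)
    pitch = max(-90.0, min(90.0, cur.pitch + pitch_rate * horizon))
    return yaw, pitch


def to_unit_vector(yaw: float, pitch: float) -> tuple[float, float, float]:
    """Convert a (yaw, pitch) view direction in degrees to a 3D unit vector."""
    y, p = math.radians(yaw), math.radians(pitch)
    return (math.cos(p) * math.cos(y), math.cos(p) * math.sin(y), math.sin(p))


def angular_distance(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle angle in degrees between two (yaw, pitch) view directions."""
    va, vb = to_unit_vector(*a), to_unit_vector(*b)
    dot = sum(x * y for x, y in zip(va, vb))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot))))


def select_focus(predicted, focus_centers):
    """Pick the (hypothetical) area-of-focus center closest to the predicted view."""
    return min(focus_centers, key=lambda c: angular_distance(predicted, c))


def select_anchor(position, anchors):
    """Pick the (hypothetical) volumetric anchor point nearest the user's 3D position."""
    return min(anchors, key=lambda a: math.dist(position, a))


if __name__ == "__main__":
    # The user turns right across the +/-180 degree seam while looking slightly up.
    pred = predict_orientation(Pose(0.0, 170.0, 0.0), Pose(0.1, -175.0, 5.0), horizon=0.2)
    centers = [(wrap_deg(45.0 * k), 0.0) for k in range(8)]  # eight yaw-only focus centers
    print(pred, select_focus(pred, centers))
    print(select_anchor((0.8, 0.1, 0.0), [(0, 0, 0), (1, 0, 0), (0, 1, 0)]))
```

In this example the predicted view is roughly yaw -145° and pitch 15°, so the focus center at yaw -135° is chosen. A real system would likely use a learned or trace-driven predictor and would also account for prediction error, for instance by widening the focus area as the prediction horizon grows.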

Artifacts produced as a result of this project, including publications, code, and datasets, will be made publicly available at https://yaoliu-yl.github.io/ImmersiveStreaming/. These artifacts will be maintained for at least five years after completion of the project.

Datasets


👁️EyeNavGS: A 6-DoF Navigation Dataset and Record-n-Replay Software for Real-World 3DGS Scenes in VR

👁️EyeNavGS is the first publicly available free-world 6-DoF navigation dataset featuring head and eye tracking data from 46 participants in 12 real-world 3DGS (3D Gaussian Splatting) scenes, recorded using a Meta Quest Pro. The 3DGS scenes are corrected for scene tilt and scale to provide a perceptually comfortable VR experience.


Dynamic 6-DoF Volumetric Video Generation: Software Toolkit and Dataset

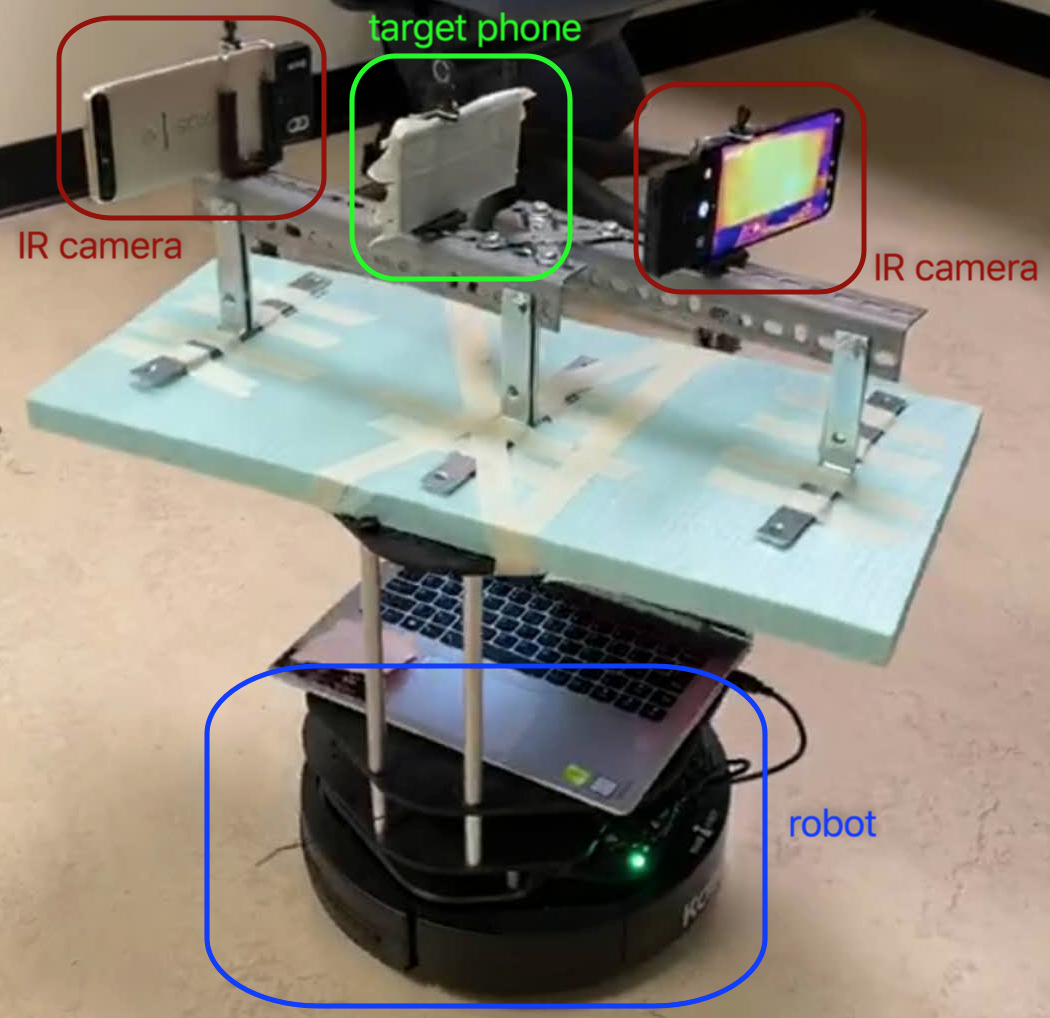

A software toolkit and dataset for dynamic 6-DoF volumetric video generation, intended to support research on immersive video. Both the dataset and the software used to generate it are available.

Publications